Contacts:

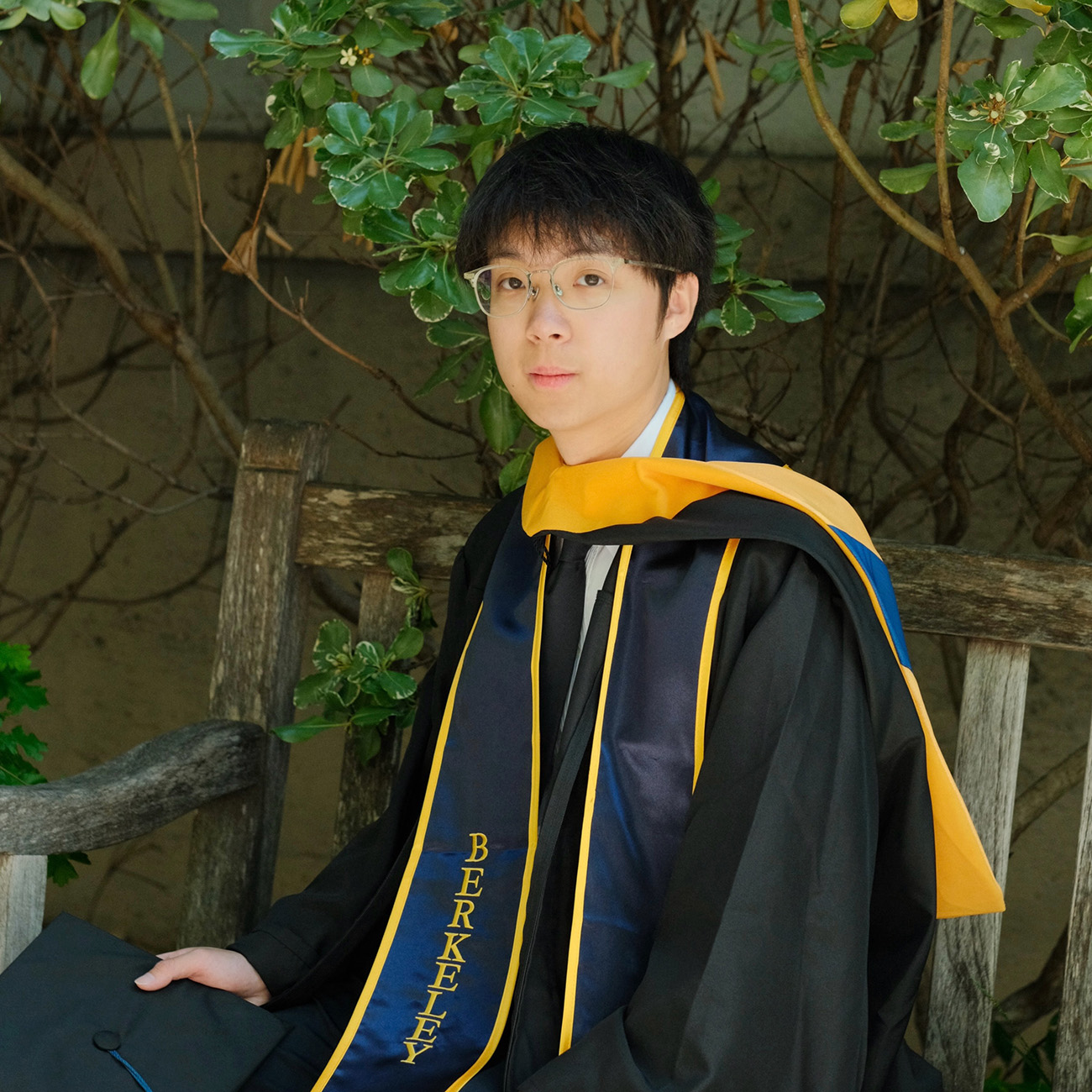

Jacky Kwok

Jacky is a PhD student in the Department of Computer Science at Stanford University, advised by Professors Marco Pavone and Azalia Mirhoseini. His research lies at the intersection of machine learning, systems, and robotics, with a particular focus on test-time scaling and foundation models for robotics. He is part of the Autonomous Systems Lab and the Scaling Intelligence Lab.

Before joining Stanford, Jacky received his Bachelor’s and Master’s degrees in Computer Science from UC Berkeley, where he conducted research at the Sky Computing Lab, Berkeley AI Research (BAIR) Lab, and iCyPhy Center. His master’s thesis focused on developing efficient and reliable systems for reinforcement learning and robotics under the guidance of Professors Ion Stoica and Edward Ashford Lee. Outside of research, he enjoys playing tennis, going to concerts, and skiing.

ASL Publications

- J. Kwok, C. Agia, R. Sinha, M. Foutter, S. Li, I. Stoica, A. Mirhoseini, and M. Pavone, “RoboMonkey: Scaling Test-Time Sampling and Verification for Vision-Language-Action Models,” in Conf. on Robot Learning, Seoul, Korea, 2025. (In Press)

Abstract: Vision-Language-Action (VLA) models have demonstrated remarkable capabilities in visuomotor control, yet ensuring their robustness in unstructured real-world environments remains a persistent challenge. In this paper, we investigate test-time scaling through the lens of sampling and verification as means to enhance the robustness and generalization of VLAs. We first demonstrate that the relationship between action error and the number of generated samples follows an exponentiated power law across a range of VLAs, indicating the existence of inference-time scaling laws. Building on these insights, we introduce RoboMonkey, a test-time scaling framework for VLAs. At deployment, RoboMonkey samples a small set of actions from a VLA, applies Gaussian perturbation and majority voting to construct an action proposal distribution, and then uses a Vision Language Model (VLM)-based verifier to select the optimal action. We propose a synthetic data generation pipeline for training such VLM-based action verifiers, and demonstrate that scaling the synthetic dataset consistently improves verification and downstream accuracy. Through extensive simulated and hardware experiments, we show that pairing existing VLAs with RoboMonkey yields significant performance gains, achieving a 25% absolute improvement on out-of-distribution tasks and 9% on in-distribution tasks. Additionally, when adapting to new robot setups, we show that fine-tuning both VLAs and action verifiers yields a 7% performance increase compared to fine-tuning VLAs alone.
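The deployment loop described in the abstract (sample a few actions, build a proposal distribution with Gaussian perturbation and majority voting, then let a verifier pick) can be illustrated with a minimal sketch. This is not the authors' implementation. The action layout (a continuous pose delta plus a discrete gripper state), the per-dimension Gaussian fit, and the names `propose_and_verify`, `sample_policy`, and `score_action` are all assumptions made for illustration; the two callables stand in for a VLA and a VLM-based verifier.

```python
import random
import statistics
from collections import Counter
from typing import Callable, List, Optional, Tuple

# Hypothetical action layout: (continuous pose delta, discrete gripper state).
Action = Tuple[List[float], int]


def propose_and_verify(
    sample_policy: Callable[[], Action],     # stand-in for sampling from a VLA
    score_action: Callable[[Action], float], # stand-in for a learned verifier
    num_policy_samples: int = 4,
    num_candidates: int = 16,
    rng: Optional[random.Random] = None,
) -> Action:
    """Return the candidate action that the verifier scores highest."""
    rng = rng or random.Random()

    # 1. Draw a small set of actions from the policy.
    samples = [sample_policy() for _ in range(num_policy_samples)]

    # 2a. Majority vote on the discrete component (assumed: gripper state).
    gripper = Counter(g for _, g in samples).most_common(1)[0][0]

    # 2b. Fit an independent Gaussian per continuous dimension (an assumption;
    #     the abstract only says "Gaussian perturbation").
    dims = list(zip(*(pose for pose, _ in samples)))
    means = [statistics.fmean(d) for d in dims]
    stds = [statistics.pstdev(d) for d in dims]

    # 3. Sample candidate actions from the resulting proposal distribution.
    candidates = [
        ([rng.gauss(m, s) for m, s in zip(means, stds)], gripper)
        for _ in range(num_candidates)
    ]

    # 4. Let the verifier select the action to execute.
    return max(candidates, key=score_action)
```

In this sketch the expensive policy is queried only `num_policy_samples` times, while the cheaper proposal distribution supplies the larger candidate pool that the verifier ranks.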

@inproceedings{KwokAgiaEtAl2025,
  author = {Kwok, J. and Agia, C. and Sinha, R. and Foutter, M. and Li, S. and Stoica, I. and Mirhoseini, A. and Pavone, M.},
  title = {RoboMonkey: Scaling Test-Time Sampling and Verification for Vision-Language-Action Models},
  booktitle = {{Conf. on Robot Learning}},
  year = {2025},
  month = jul,
  address = {Seoul, Korea},
  keywords = {press},
  note = {In press},
  owner = {kwok},
  timestamp = {2025-07-07},
  url = {https://arxiv.org/abs/2506.17811}
}